Prompt engineering is the practice of designing clear, effective prompts for AI tools like ChatGPT and GPT-4 so they deliver accurate, useful, and creative results. This 15-minute guide explains what prompt engineering is, why it matters, and how thoughtful prompt design can boost productivity and search visibility. By treating the prompt as a programmable interface where wording, context, and structure are intentional, you can consistently draw better responses from large language models.

At its core, prompt engineering recognizes that language models do not think or understand in the human sense. They generate text based on patterns learned from vast training data. A prompt is the handle we use to influence which patterns the model activates. By describing the task clearly, specifying constraints, or offering examples, we reduce ambiguity and increase the chance of getting a helpful answer. The quality of the prompt often determines whether the model behaves like a brilliant assistant or a confused guesser.

Why prompts matter

Early language model demos seemed almost magical. Yet behind every impressive result was a well-crafted prompt. The same model can deliver wildly different outputs when given slightly different wording. Prompts set the stage: they tell the model what role to play, what tone to use, which details to focus on, and what format the answer should take. A vague prompt like “Tell me about cars” yields a generic paragraph. A focused prompt that names the audience, the purpose, and the desired level of detail produces something far more targeted.

In professional settings, prompt quality has direct business impact. A customer support bot must reply with accurate policy information and an appropriate tone. A research assistant must cite sources and avoid hallucinated facts. A marketing writer must stay on brand. When the stakes are high, teams cannot rely on improvised wording. They design prompts deliberately, test variations, and maintain libraries of battle-tested instructions. Good prompt engineering turns language models from novelty gadgets into dependable tools.

Building blocks of effective prompts

Effective prompts share several common ingredients. First is clear intent: the model should know exactly what task it is performing. Stating the goal up front reduces the chance of digression. Second is context. Models respond better when given relevant background information, such as audience characteristics or domain specifics. Third is output guidance. If a response should follow a template, include the template in the prompt. A request such as “Give me a JSON object with these keys” or “Respond using bullet points” nudges the model to adopt the requested structure.
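The three ingredients can be made explicit in a small helper. This is only a sketch: the function name `build_prompt`, the section labels, and the sample task are illustrative assumptions, not a standard format.

```python
def build_prompt(intent: str, context: str, output_guidance: str) -> str:
    """Assemble a prompt from intent, context, and output guidance.

    The "Task / Context / Output format" labels are an illustrative
    convention, not something any particular model requires.
    """
    sections = [
        f"Task: {intent.strip()}",
        f"Context: {context.strip()}",
        f"Output format: {output_guidance.strip()}",
    ]
    # Blank lines between sections keep the three parts visually distinct.
    return "\n\n".join(sections)


prompt = build_prompt(
    intent="Summarize the attached release notes.",
    context="The readers are support agents who are not developers.",
    output_guidance="Respond using at most five bullet points.",
)
print(prompt)
```

Keeping the ingredients as separate arguments makes it easy to vary one of them, for example the audience, while holding the others fixed.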

Many prompts also employ examples, a technique known as few-shot prompting. By providing sample input-output pairs, we demonstrate the pattern we expect the model to follow. For instance, a sentiment analysis prompt might show a short review labeled “Positive” and another labeled “Negative” before presenting the text to be classified. Examples give the model a miniature training set tailored to the task at hand.
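A few-shot prompt for the sentiment example above might be assembled like this. The helper name, the “Review:” and “Sentiment:” labels, and the sample reviews are assumptions made for illustration.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Place labeled examples before the unlabeled text to classify."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry repeats the pattern but leaves the label open,
    # inviting the model to complete it.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [
    ("The battery lasts all day and the screen is gorgeous.", "Positive"),
    ("It stopped working after a week.", "Negative"),
]
few_shot_prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as Positive or Negative.",
    examples,
    "Setup was quick and support answered right away.",
)
print(few_shot_prompt)
```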

Iterative prompt design

Prompt engineering is rarely a one-and-done activity. Like any design process, it benefits from iteration. A typical workflow begins with a straightforward prompt to establish a baseline. After reviewing the output, we adjust wording, add constraints, or supply extra context. Each iteration tests a hypothesis about what the model needs. Over time the prompt evolves into a concise set of instructions that consistently yield the desired result. Keeping notes on what works and what fails turns ad-hoc experimentation into a repeatable methodology.

Iteration also helps uncover model limitations. If repeated tweaks cannot coax a reliable answer, the task may exceed the model’s capabilities or require a different approach, such as breaking it into smaller subtasks. Prompt engineers learn to recognize when to stop polishing a single prompt and instead orchestrate a sequence of prompts that build on one another. This decomposition can transform a complex request into a manageable pipeline.
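One way to picture this decomposition is a loop that feeds each step's output into the next prompt. The sketch below is deliberately minimal: `run_pipeline` and `fake_model` are hypothetical names, and `fake_model` is a stand-in that only uppercases text so the data flow is visible without calling a real model.

```python
from typing import Callable, List


def run_pipeline(
    steps: List[str], source_text: str, call_model: Callable[[str], str]
) -> str:
    """Run prompt templates in order, passing each result to the next step."""
    result = source_text
    for template in steps:
        prompt = template.format(input=result)
        result = call_model(prompt)
    return result


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call (an assumption for this sketch):
    # it returns the last line of the prompt in uppercase.
    return prompt.splitlines()[-1].upper()


steps = [
    "Extract the key claims from the text below.\n{input}",
    "Rewrite these claims as a short summary.\n{input}",
]
final = run_pipeline(steps, "models follow patterns", fake_model)
print(final)
```

In a real pipeline, `call_model` would wrap whichever model client the team uses, and each step could be tested and revised on its own.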

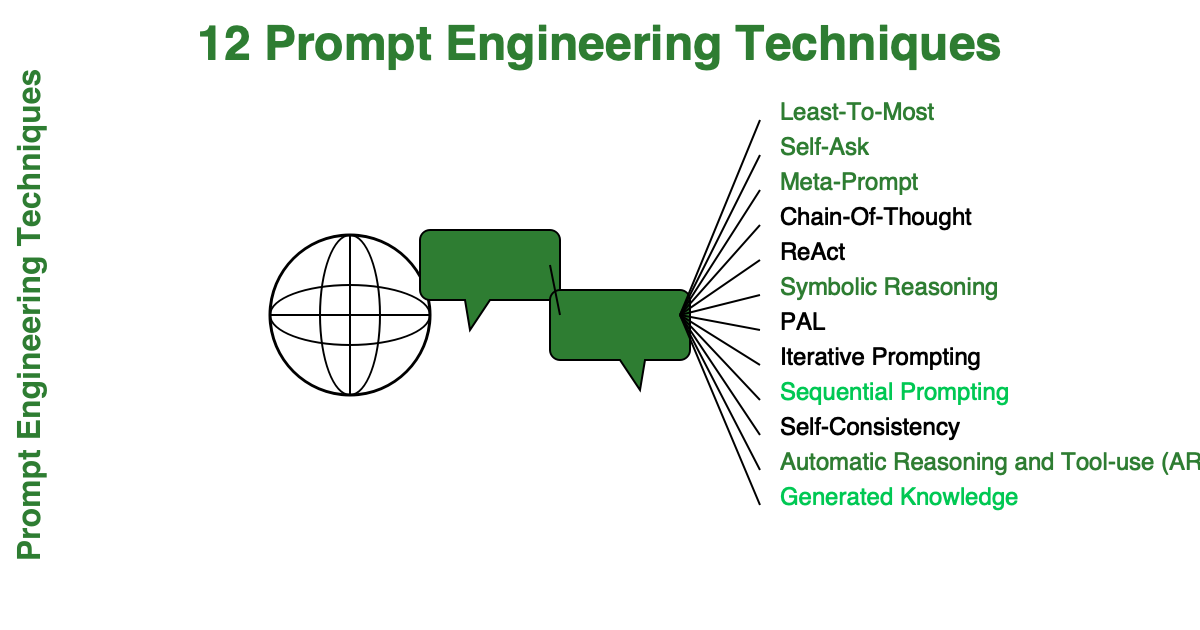

Advanced strategies

As practice matures, engineers adopt advanced strategies to push models further. One popular technique is chain-of-thought prompting, which explicitly asks the model to reason step by step. Instead of jumping straight to a final answer, the model writes out its intermediate steps, which often improves accuracy on multi-step problems and makes the answer easier to check. Another approach is role prompting, where the prompt casts the model as a specific persona, such as a helpful teacher, a strict editor, or a witty storyteller. Personas shape vocabulary and tone, making the output feel more tailored.
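Both techniques can be combined in one request. The sketch below assumes a chat-style message list with `system` and `user` roles, a common convention that varies by provider; the helper names and the “Answer:” marker are illustrative choices.

```python
from typing import Dict, List


def build_messages(
    persona: str, question: str, step_by_step: bool = True
) -> List[Dict[str, str]]:
    """Combine a persona (role prompting) with a step-by-step request."""
    user = question
    if step_by_step:
        user += (
            "\n\nReason through the problem step by step, then give the "
            "final answer on its own line, prefixed with 'Answer:'."
        )
    return [
        {"role": "system", "content": f"You are {persona}."},
        {"role": "user", "content": user},
    ]


def extract_answer(response: str) -> str:
    """Pull the final answer out of a response that shows its reasoning."""
    for line in reversed(response.splitlines()):
        if line.startswith("Answer:"):
            return line[len("Answer:"):].strip()
    # No marker found: fall back to the whole response.
    return response.strip()


messages = build_messages(
    "a patient math teacher", "What is 17 + 25?"
)
sample_response = "17 + 25 = 17 + 20 + 5 = 42.\nAnswer: 42"
print(extract_answer(sample_response))
```

Asking for a marked final line keeps the reasoning visible while still giving downstream code a predictable place to find the result.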

Structured output is another area of focus. By combining precise instructions with examples, prompts can elicit tables, code snippets, or machine-readable data formats. Some teams supplement prompts with programmatic validation, automatically checking that the model’s output matches a schema. When the model deviates, the system can retry with corrective instructions or fall back to a safer response. These patterns illustrate how prompt engineering bridges freeform language and formal software requirements.
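The validate, retry, and fall back pattern described above could look roughly like this. The schema (`title`, `priority`), the fallback value, and the `call_model` parameter are assumptions for the sake of the example; a production system would more likely use a dedicated schema validation library.

```python
import json
from typing import Callable, Optional, Tuple

# Hypothetical schema: required keys and their expected Python types.
REQUIRED_KEYS = {"title": str, "priority": int}
FALLBACK = {"title": "unknown", "priority": 0}


def validate(raw: str) -> Tuple[Optional[dict], Optional[str]]:
    """Return (data, None) when raw matches the schema, else (None, error)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"invalid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return None, "expected a JSON object"
    for key, expected_type in REQUIRED_KEYS.items():
        if key not in data:
            return None, f"missing key '{key}'"
        if not isinstance(data[key], expected_type):
            return None, f"key '{key}' must be {expected_type.__name__}"
    return data, None


def ask_for_json(
    prompt: str, call_model: Callable[[str], str], max_attempts: int = 3
) -> dict:
    """Retry with corrective instructions, then fall back to a safe default."""
    current = prompt
    for _ in range(max_attempts):
        data, error = validate(call_model(current))
        if error is None:
            return data
        current = (
            f"{prompt}\n\nYour previous reply was rejected ({error}). "
            "Reply with only a valid JSON object."
        )
    return dict(FALLBACK)


# Scripted stand-in for a model: the first reply is invalid, the second is valid.
replies = iter(["Sure! Here it is", '{"title": "Fix login", "priority": 2}'])
result = ask_for_json(
    "Describe the ticket as JSON with keys title and priority.",
    lambda _prompt: next(replies),
)
print(result)
```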

Applications across domains

Prompt engineering is not confined to chat interfaces. Developers use prompts to generate code, write documentation, summarize research papers, and even create synthetic data for training other models. In education, instructors craft prompts that encourage students to ask deeper questions or explore multiple viewpoints. In journalism, reporters experiment with prompts that surface surprising angles while preserving factual accuracy. Each domain brings its own vocabulary and conventions, so prompts must adapt accordingly.

Consider software development. A vague request like “Write a login form” might produce an incomplete snippet. A refined prompt would specify the framework, the authentication method, accessibility requirements, and error handling strategy. For data analysis, prompts can include sample datasets and explicit instructions on which statistical measures to compute. The same principles apply to creative writing, where prompts can define character traits, narrative arcs, or stylistic influences to keep the model on track.

Challenges and limitations

Despite its power, prompt engineering has limitations. Models sometimes ignore instructions, particularly when prompts grow long or contain conflicting cues. They may also fabricate facts, a phenomenon known as hallucination. Engineers counter these issues by keeping prompts concise, verifying outputs with external tools, and telling the model explicitly when it is acceptable to express uncertainty rather than guess. Ethical considerations are equally important: prompts should avoid reinforcing harmful biases or generating misleading content.

Another challenge is transferability. A prompt tuned for one model may behave differently on another. Even within the same family of models, updates to training data or decoding parameters can shift behavior. Effective prompt engineers document assumptions and monitor model versions just as software engineers track dependencies. The field is still young, and best practices continue to evolve as more organizations share their experiences.

Tooling and collaboration

As teams scale their use of language models, tooling becomes vital. Version control systems can store prompt templates alongside code. Evaluation dashboards help compare outputs from different prompt variants. Some platforms offer visual interfaces for assembling multi-step prompt workflows, enabling non-programmers to experiment safely. Collaboration is crucial: subject matter experts supply domain knowledge, while engineers translate that knowledge into precise instructions for the model.
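Comparing prompt variants does not require a dashboard to get started; a small scoring loop over labeled test cases already shows which wording performs better. In this sketch, `score_variants`, the exact-match metric, and the scripted `fake_model` are all assumptions chosen to keep the example self-contained.

```python
from typing import Callable, Dict, List, Tuple


def score_variants(
    variants: Dict[str, str],
    cases: List[Tuple[str, str]],
    call_model: Callable[[str], str],
) -> Dict[str, float]:
    """Return the fraction of cases each prompt variant answers exactly."""
    scores = {}
    for name, template in variants.items():
        hits = 0
        for text, expected in cases:
            output = call_model(template.format(text=text))
            if output.strip().lower() == expected.lower():
                hits += 1
        scores[name] = hits / len(cases)
    return scores


def fake_model(prompt: str) -> str:
    # Scripted stand-in for a real model: it only gives a bare label
    # when the prompt asks for a one-word answer.
    label = "Positive" if "great" in prompt.lower() else "Negative"
    if "one word" in prompt.lower():
        return label
    return f"I think the sentiment is {label}."


variants = {
    "loose": "What is the sentiment? {text}",
    "strict": "Answer with one word, Positive or Negative. Review: {text}",
}
cases = [("This is great", "Positive"), ("It broke", "Negative")]
scores = score_variants(variants, cases, fake_model)
print(scores)
```

Exact match is a strict metric; teams often swap in a looser comparison or a human review step once the basic loop is in place.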

Documentation plays a central role in collaboration. A well-documented prompt includes its purpose, expected inputs and outputs, known limitations, and revision history. Treating prompts as shared assets encourages reuse and continuous improvement. In mature teams, prompt engineering becomes part of the standard development cycle, with code reviews and testing extending to natural language instructions.
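The documentation fields listed above map naturally onto a small record type that can live in version control next to the code. The class and field names below are hypothetical; the point is only that purpose, inputs, outputs, limitations, and history travel together with the template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Revision:
    version: str
    note: str


@dataclass
class PromptRecord:
    name: str
    purpose: str
    template: str
    expected_inputs: List[str]
    expected_output: str
    known_limitations: List[str] = field(default_factory=list)
    revisions: List[Revision] = field(default_factory=list)

    def render(self, **values: str) -> str:
        """Fill the template, refusing to run with undocumented gaps."""
        missing = [key for key in self.expected_inputs if key not in values]
        if missing:
            raise ValueError(f"missing inputs: {missing}")
        return self.template.format(**values)

    def revise(self, version: str, template: str, note: str) -> None:
        """Replace the template and record why it changed."""
        self.template = template
        self.revisions.append(Revision(version, note))


record = PromptRecord(
    name="ticket-summary",
    purpose="Summarize a support ticket for a handoff note.",
    template="Summarize this ticket in two sentences:\n{ticket}",
    expected_inputs=["ticket"],
    expected_output="Two plain-text sentences.",
    known_limitations=["Untested on tickets longer than a few pages."],
)
record.revise(
    "1.1",
    "Summarize this ticket in two sentences for a new agent:\n{ticket}",
    "Named the audience after summaries came back too technical.",
)
print(record.render(ticket="Customer cannot reset password."))
```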

The future of prompt engineering

Looking ahead, prompt engineering will likely blend with other forms of AI alignment. Researchers are exploring ways to let models learn from human feedback more directly, reducing the need for elaborate prompts. Yet even as models become more capable, the ability to communicate goals clearly will remain essential. Prompt engineering teaches us to express intentions precisely, a skill that transcends any particular model or interface.

We can expect richer tools for managing prompts at scale: linters that detect ambiguous wording, simulators that predict model behavior, and integrations that link prompts with analytics pipelines. Education will also expand, with curricula that treat prompt engineering as a core competency for knowledge workers. The field sits at the intersection of linguistics, design, and software engineering, making it an exciting frontier for interdisciplinary collaboration.

Conclusion

Prompt engineering transforms language models from black boxes into customizable instruments. By understanding how models interpret language, we can steer them toward outputs that serve real human needs. The discipline demands clarity, creativity, and a willingness to iterate. As models grow more integral to everyday work, those who can communicate with them effectively will shape the next wave of innovation. Mastering prompt engineering is not just about talking to machines; it is also about refining our own ability to articulate ideas and solve problems through language.
