Intelligent AI Solutions for Modern Enterprises

From agentic AI assistants to private LLM deployments — we build, deploy, and support enterprise AI solutions that transform how Malaysian businesses operate.

Certified AI Hardware Solutions

We partner with leading hardware vendors to deliver complete AI infrastructure, from workstations to enterprise GPU servers.

ThinkStation PX SFF

AI Workstation Partner

Compact AI workstation with NVIDIA RTX professional GPUs. Perfect for on-premise LLM inference and development.

- Up to 2x NVIDIA RTX 6000 Ada

- Intel Xeon W processors

- Small form factor design

- Enterprise reliability

Ascent GX10

AI Workstation Partner

High-performance AI server optimized for large language model workloads. Scalable GPU compute for enterprise deployments.

- Multi-GPU configurations

- Optimized for LLM inference

- Enterprise-grade cooling

- 24/7 operation certified

🔧 Full-Stack AI Deployment — We handle software, hardware procurement, installation, and ongoing support. One partner for your complete AI infrastructure.

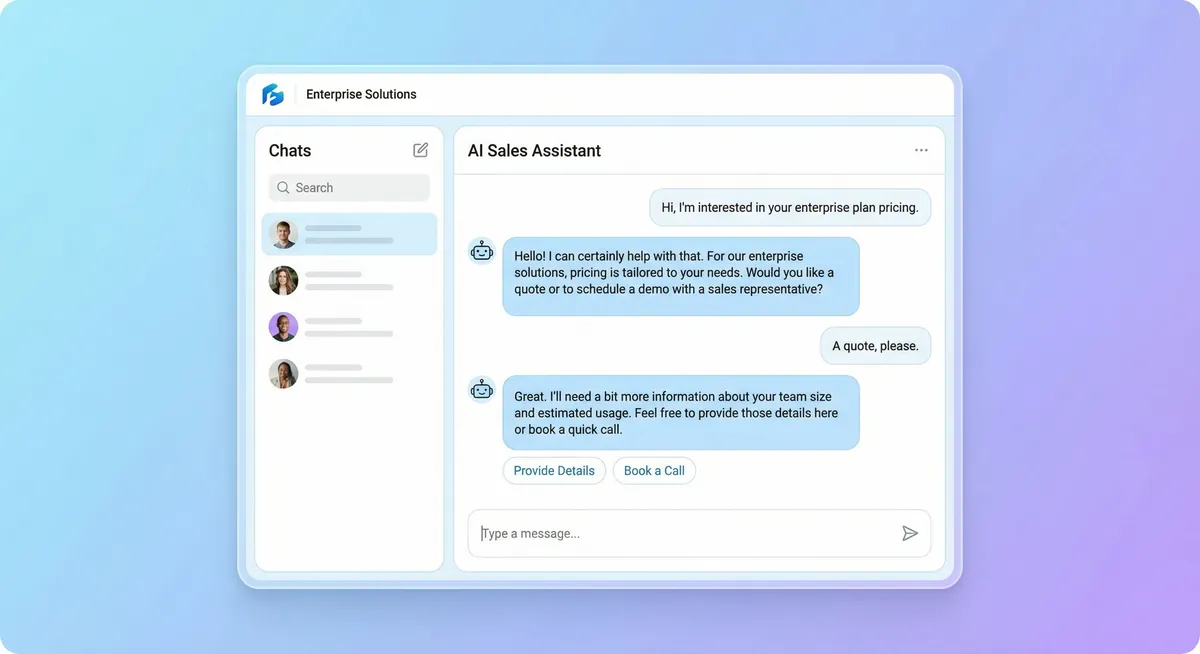

EzyChat AI Assistants

Production-ready AI assistants for sales automation and enterprise knowledge management. Choose SaaS or on-premise deployment.

EzyChat

SaaS Platform

Multi-Tenant Sales Assistant

An AI-powered sales assistant delivered as a SaaS platform. Automate customer inquiries, qualify leads, and close sales 24/7 across WhatsApp, website chat, and social media.

- Multi-channel deployment (WhatsApp, Web, Social)

- Intelligent lead qualification & routing

- Product catalog integration

- Multi-tenant architecture for agencies

- Analytics dashboard & reporting

EzyChat Business

Enterprise

Private LLM for Enterprises

Enterprise-grade AI assistant powered by your own private LLM. Complete data sovereignty with on-premise deployment. Your data never leaves your infrastructure.

- 100% on-premise deployment

- Private LLM with your company data

- Document generation & automation

- Knowledge base integration

- Enterprise SSO & access control

AI Solutions for Every Need

From conversational AI to enterprise automation — we have the expertise to build, deploy, and support your AI initiatives.

Agentic AI Development

Build autonomous AI agents that can reason, plan, and execute complex multi-step tasks. From customer service to operations automation.
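As a rough illustration of the "plan, then execute" loop such agents run, here is a minimal sketch. It assumes a hypothetical tool registry and a stubbed planner standing in for the LLM; names like `lookup_order`, `plan`, and `run_agent` are illustrative only and are not part of any EzyChat API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical tools. In a real deployment these would call your ERP, CRM, or other APIs.
def lookup_order(order_id: str) -> str:
    orders = {"ORD-1": "shipped", "ORD-2": "pending"}  # stand-in data
    return orders.get(order_id, "unknown")

def draft_reply(status: str) -> str:
    return f"Your order status is: {status}."

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "draft_reply": draft_reply,
}

@dataclass
class Step:
    tool: str
    arg: Optional[str] = None  # None means "feed in the previous step's output"

def plan(task: str) -> List[Step]:
    """Stubbed planner: a real agent would ask an LLM to produce these steps."""
    order_id = task.split()[-1]
    return [Step("lookup_order", order_id), Step("draft_reply")]

def run_agent(task: str) -> str:
    """Execute each planned step in order, chaining outputs between tools."""
    result = ""
    for step in plan(task):
        tool = TOOLS[step.tool]
        result = tool(step.arg if step.arg is not None else result)
    return result
```

In a production agent the planner would be an LLM call and the loop would usually re-plan after each tool result; keeping the tools behind a fixed registry limits what the model is allowed to execute.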

Private LLM Deployment

Deploy open-source LLMs on your own infrastructure with vLLM. Complete data privacy with no external API calls. Your data stays yours.
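vLLM can expose an OpenAI-compatible HTTP API (for example, started with something like `vllm serve Qwen/Qwen2.5-7B-Instruct`, which listens on port 8000 by default), so internal applications can query the model without any traffic leaving your network. The sketch below uses only the Python standard library; the host, port, and model id are assumptions to replace with your own deployment's values.

```python
import json
import urllib.request

# Assumptions: a vLLM OpenAI-compatible server on localhost:8000 serving the
# example model below. Replace both with your actual deployment.
BASE_URL = "http://localhost:8000/v1"
MODEL = "Qwen/Qwen2.5-7B-Instruct"  # example model id

def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt: str) -> str:
    """Send the request to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Summarise our leave policy in two sentences.")` returns the model's reply; because the endpoint is OpenAI-compatible, existing OpenAI client code can usually be pointed at it by changing only the base URL.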

RAG & Knowledge Systems

Build intelligent knowledge management systems that understand your documents, policies, and data. Accurate answers grounded in your information.
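The core retrieval-augmented generation (RAG) pattern is: retrieve the passages most relevant to a question, then instruct the model to answer only from them. The toy sketch below uses bag-of-words cosine similarity as a stand-in for the embedding model and vector store a production system would use; all function names are illustrative.

```python
import math
import re
from collections import Counter
from typing import List

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]

def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the model: include only retrieved passages as context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

The resulting prompt is what gets sent to the private LLM; swapping `cosine` over word counts for dense embeddings changes retrieval quality, not the overall flow.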

Intelligent Detection

AI-powered detection systems for fraud, anomalies, sentiment, and patterns. Turn your data into actionable intelligence.
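As a simple example of anomaly detection on transaction data, the sketch below applies the modified z-score, a common robust rule based on the median and median absolute deviation (MAD) rather than the mean and standard deviation, which a single large outlier can distort. The 0.6745 constant and 3.5 cutoff are the conventional choices for this rule; production fraud systems typically layer learned models on top of rules like this.

```python
from statistics import median
from typing import List

def mad_outliers(values: List[float], threshold: float = 3.5) -> List[int]:
    """Return indices whose modified z-score, 0.6745 * (x - median) / MAD,
    exceeds the threshold in absolute value."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread at all, so this rule cannot score anything
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]
```

For amounts `[120, 95, 130, 110, 105, 99, 5000, 115, 125, 101]`, only index 6 (the 5000 transaction) is flagged.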

Document Automation

Automate document creation, extraction, and processing. Generate contracts, reports, and proposals with AI assistance.
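One common way to keep AI-assisted documents consistent is to generate only the variable parts (for instance, a scope paragraph an LLM drafts) and merge them into an approved template. The minimal sketch below uses Python's `string.Template`; the template text and field names are hypothetical examples.

```python
from string import Template
from typing import Dict

# Hypothetical approved template; an LLM might draft the $scope text.
PROPOSAL = Template(
    "Proposal for $client\n"
    "Scope: $scope\n"
    "Estimated timeline: $weeks weeks\n"
)

def render(template: Template, fields: Dict[str, str]) -> str:
    """Fill the template. substitute() raises KeyError if any placeholder
    is missing, so incomplete documents fail loudly instead of shipping."""
    return template.substitute(fields)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field is treated as an error to review, not silently left as `$placeholder` text.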

AI Integration Services

Integrate AI capabilities into your existing systems. Connect LLMs to your ERP, CRM, and business applications.

Malaysia's AI Integration Specialists

We combine deep AI expertise with local presence to deliver enterprise solutions that work for Malaysian businesses.

100% Data Privacy

Your data never leaves your infrastructure. We deploy AI on your servers with air-gapped options available. No cloud dependencies required.

Production-Ready vLLM

Enterprise-grade inference with the vLLM framework: high throughput and low latency, optimized for production workloads on standard GPU hardware.

Broad Open-Source LLM Support

Support for Llama, Qwen, Mistral, DeepSeek, and more. Choose the best model for your use case without vendor lock-in.

Local Malaysian Team

Support is not outsourced overseas. Our AI engineers are based in Malaysia for responsive support, on-site deployment, and ongoing optimization.

End-to-End Delivery

From requirements to production deployment. We handle infrastructure, model selection, fine-tuning, integration, and ongoing maintenance.

Transparent Pricing

No hidden API costs or per-token charges. One-time deployment with optional support contracts. You own the infrastructure.

AI Solutions Across Industries

We've deployed AI solutions across diverse sectors, each with unique requirements and compliance needs.

- Finance & Banking

- Manufacturing

- Retail & E-commerce

- Legal & Professional

- Healthcare

- Government & GLC

- Education

- Logistics & Supply Chain

Frequently Asked Questions

Common questions about our AI solutions and deployment approach.

How do you ensure data privacy with AI solutions?

We specialize in on-premise deployments where your data never leaves your infrastructure. Using vLLM and open-source models, we deploy AI entirely within your network — no external API calls, no cloud dependencies. For organizations with strict compliance requirements, we offer air-gapped deployments with no internet connectivity.

What LLM models do you support?

We support all major open-source LLM families, including Llama 3.1/3.2, Qwen 2.5, Mistral, DeepSeek, and more. Our vLLM-based infrastructure can serve Hugging Face models whose architectures vLLM supports. We help you select the best model based on your use case, language requirements (including Bahasa Malaysia and Chinese), and hardware constraints.

What hardware is needed for private LLM deployment?

Requirements depend on model size and throughput needs. For small deployments, a single NVIDIA RTX 4090 (24 GB) can run 7B models at 16-bit precision, and 13B models with 8-bit or 4-bit quantization. For enterprise workloads, we typically recommend A100 or H100 GPUs. We can also help you set up efficient CPU-based inference for lower-volume use cases.
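A quick way to sanity-check GPU sizing is that model weights alone need roughly (parameters × bits per parameter ÷ 8) bytes: a 7B model at 16-bit needs about 14 GB, while a 13B model needs about 26 GB, more than a 24 GB card holds unless it is quantized. The sketch below encodes that arithmetic; the 20% headroom for KV cache, activations, and runtime is an assumed rule of thumb, not a vLLM setting, and real usage grows with context length and concurrency.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes):
    (params_billion * 1e9) * (bits / 8) / 1e9 = params_billion * bits / 8."""
    return params_billion * bits_per_param / 8

def fits(params_billion: float, bits_per_param: int, vram_gb: float,
         headroom: float = 0.2) -> bool:
    """Rough check, reserving an assumed fraction of VRAM for KV cache,
    activations, and runtime overhead."""
    return weight_memory_gb(params_billion, bits_per_param) <= vram_gb * (1 - headroom)
```

For a 24 GB card, `fits(7, 16, 24)` and `fits(13, 8, 24)` are `True`, while `fits(13, 16, 24)` is `False`.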

How long does it take to deploy an AI solution?

A basic EzyChat deployment can be completed in 1-2 weeks. Custom agentic AI development typically takes 4-8 weeks depending on complexity. Full private LLM infrastructure with RAG systems usually requires 6-12 weeks including data preparation, model fine-tuning, and integration testing.

What ongoing support do you provide?

We offer flexible support packages including system monitoring, model updates, performance optimization, and troubleshooting. For production systems, we recommend our managed service tier which includes 24/7 monitoring, automatic scaling, and priority support with guaranteed response times.

How does pricing work for AI solutions?

Unlike cloud AI services with per-token pricing, our on-premise solutions have predictable costs. You pay for initial deployment and optional ongoing support. There are no API fees or usage-based charges; once deployed, the infrastructure is yours to use as much as your hardware allows.
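To compare the two pricing models for your own workload, a simple break-even calculation helps: find the month at which cumulative per-token cloud spend overtakes the upfront deployment cost plus monthly support. The sketch below assumes flat monthly usage and ignores electricity, hardware refresh, and staffing; every figure in the example is a hypothetical placeholder, not an actual quote.

```python
from typing import Optional

def break_even_months(upfront: float, monthly_support: float,
                      monthly_tokens_millions: float,
                      price_per_million_tokens: float) -> Optional[float]:
    """Months until cumulative cloud spend equals upfront + support spend.
    Solves upfront + support * m = cloud * m for m.
    Returns None if the monthly cloud bill never exceeds the support fee."""
    monthly_cloud = monthly_tokens_millions * price_per_million_tokens
    monthly_saving = monthly_cloud - monthly_support
    if monthly_saving <= 0:
        return None
    return upfront / monthly_saving
```

With placeholder values of 60,000 upfront, 2,000 per month support, and 500 million tokens a month at 10 per million, the cloud bill is 5,000 a month and `break_even_months(60000, 2000, 500, 10)` returns `20.0` months (all in the same currency unit).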

Ready to Transform Your Business with AI?

Schedule a consultation to discuss your AI requirements. Our team will help you identify the right solutions — from ready-to-deploy products to custom development.